This article shows how to get started using MSMQ as the communication protocol with WCF, and how to apply message-level security.

MSMQ has some strengths compared to HTTP and other communication protocols:

- Messages are durable by default: if the server is down, the client can still send messages, and they are delivered once the server is back up

- There are no responses, as the protocol is one-way, and sometimes avoiding a response is an advantage

- All requests are queued and can be processed in order. Transactional MSMQ queues ensure that each request is delivered only once

- It can use MSMQ features such as journaling, dead-letter queues and poison-message handling, and it integrates well with BizTalk

- It is a very resilient and robust communication protocol, and it works well both in server-to-server scenarios and for clients

It also has some weaknesses:

- There are no responses, so you have to inspect the queue(s) to find out whether something went wrong

- With a large volume of messages, MSMQ queues can fill up, and it is not the fastest protocol

- Clients must also have MSMQ installed, and Windows does not install it by default

- There is a learning curve for developers and users, as MSMQ is less known than HTTP, HTTPS and TCP

I have created a sample to get you started with WCF and MSMQ. It supports message-level security with self-signed certificates for the client and the server.

First, clone this repository with Git:

```shell
git clone https://toreaurstad@bitbucket.org/toreaurstad/demonetmsmqwcfgit.git
```

Open up the solution in Visual Studio (2017 or 2015).

First, the solution needs self-signed certificates for the client and the server on your developer PC. Run the PowerShell script in the Scripts folder of the Host project:

`CreateCertificatesMsmqDemo.ps1`

Run it as administrator. The script:

- uses PowerShell to generate the new certificates
- copies Openssl.exe to the c:\openssl folder (if you already have a populated c:\openssl folder, you might want to change this)
- converts the certificates so that their private keys use the RSA (CryptoAPI) format instead of CNG

There is more setup to do. In the Internet Information Services manager (inetmgr), select the web site for this solution and set its enabled protocols to http,net.msmq.

You also need to install MSMQ as a Windows feature, with the required subfeatures.

After the certificates are installed, open them in MMC (Certificates snap-in, Local Computer, Personal store). Right-click each certificate and select All Tasks > Manage Private Keys. Adjust the security settings there so that your App Pool user can access the private keys. Otherwise the service cannot use the certificates for MSMQ.
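Before moving on, it can help to verify that both certificates are where the configuration expects them. The following is a minimal C# sketch and is not part of the sample repository. The class name `CertificateCheck` is hypothetical, and the sketch assumes the subject names `MSMQWcfDemoserver` and `MSMQWcfDemoClient` used in the configuration files. It only checks that each certificate exists in LocalMachine\My with an associated private key. It does not prove that the App Pool identity has been granted access to that key.

```csharp
using System;
using System.Security.Cryptography.X509Certificates;

public static class CertificateCheck
{
    // Returns true if a certificate whose subject name matches subjectName
    // exists in LocalMachine\My and has an associated private key.
    public static bool HasCertificateWithPrivateKey(string subjectName)
    {
        var store = new X509Store(StoreName.My, StoreLocation.LocalMachine);
        store.Open(OpenFlags.ReadOnly);
        try
        {
            // validOnly: false, because self-signed certificates do not chain to a trusted root
            X509Certificate2Collection matches = store.Certificates.Find(
                X509FindType.FindBySubjectName, subjectName, false);
            foreach (X509Certificate2 certificate in matches)
            {
                if (certificate.HasPrivateKey)
                {
                    return true;
                }
            }
            return false;
        }
        finally
        {
            store.Close();
        }
    }

    public static void Main()
    {
        // Subject names taken from web.config and app.config in this sample
        foreach (string name in new[] { "MSMQWcfDemoserver", "MSMQWcfDemoClient" })
        {
            Console.WriteLine("{0}: {1}", name, HasCertificateWithPrivateKey(name) ? "found" : "missing");
        }
    }
}
```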

In Inetmgr (IIS Manager), the Enabled Protocols setting for the web site of this article is found under the site's Advanced Settings.
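If you prefer to script the IIS part, Microsoft.Web.Administration (the managed API for IIS configuration) can set the enabled protocols. This is a hedged sketch with several assumptions:

- The sample is hosted at the root application of a site named Default Web Site. Adjust both to your setup.
- The program runs as administrator.
- The class and helper names are hypothetical.

The sketch also adds a net.msmq site binding with the binding information `localhost` if the site has none, which is the usual setup for hosting net.msmq services in IIS.

```csharp
using System;
using System.Linq;
using Microsoft.Web.Administration;

public static class IisMsmqSetup
{
    // Appends a protocol to a comma-separated enabledProtocols value,
    // unless it is already present (case-insensitive).
    public static string AddProtocol(string enabledProtocols, string protocol)
    {
        var protocols = (enabledProtocols ?? string.Empty)
            .Split(new[] { ',' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(p => p.Trim())
            .ToList();
        if (!protocols.Contains(protocol, StringComparer.OrdinalIgnoreCase))
        {
            protocols.Add(protocol);
        }
        return string.Join(",", protocols);
    }

    public static void Main()
    {
        using (var serverManager = new ServerManager())
        {
            // Hypothetical site name and application path: adjust to where the Host project is deployed
            Site site = serverManager.Sites["Default Web Site"];
            if (site == null)
            {
                throw new InvalidOperationException("Site not found");
            }
            Application app = site.Applications["/"];
            app.EnabledProtocols = AddProtocol(app.EnabledProtocols, "net.msmq");

            // The site also needs a net.msmq binding for the listener adapter to pick up the service
            if (!site.Bindings.Any(b => b.Protocol == "net.msmq"))
            {
                site.Bindings.Add("localhost", "net.msmq");
            }
            serverManager.CommitChanges();
        }
    }
}
```

Merging into the existing value, rather than overwriting it, keeps any protocols the site already uses.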

Moving on to the solution itself, I will describe the implementation details. Note that the MSMQ queue is created programmatically, because WCF does not create the queue automatically.

I created a ServiceHostFactory subclass that creates the MSMQ queue used for communication between the client and the server.

```csharp
using System;
using System.Configuration;
using System.Messaging;
using System.ServiceModel;
using System.ServiceModel.Activation;

namespace WcfDemoNetMsmqBinding.Host
{
    public class MessageQueueFactory : ServiceHostFactory
    {
        public override ServiceHostBase CreateServiceHost(string constructorString, Uri[] baseAddresses)
        {
            // Make sure the queue exists before WCF starts listening on it
            CreateMsmqIfMissing();
            return base.CreateServiceHost(constructorString, baseAddresses);
        }

        public static void CreateMsmqIfMissing()
        {
            // Path of a local private queue, for example .\private$\DemoQueue3
            string queueName = string.Format(@".\private$\{0}", MessageQueueName);
            if (!MessageQueue.Exists(queueName))
            {
                // true = create a transactional queue
                MessageQueue createdMessageQueue = MessageQueue.Create(queueName, true);

                // Grant the identity running the host (the App Pool user in IIS) full control of the queue
                string userName = System.Security.Principal.WindowsIdentity.GetCurrent().Name;
                createdMessageQueue.SetPermissions(userName, MessageQueueAccessRights.FullControl);
            }
        }

        // Queue name from the QueueName appSetting in web.config
        public static string MessageQueueName
        {
            get
            {
                return ConfigurationManager.AppSettings["QueueName"];
            }
        }
    }
}
```

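This class creates the message queue if it is missing. The queue name is read from the QueueName appSetting in web.config. The second argument to MessageQueue.Create makes the queue transactional, which the binding's exactlyOnce="true" setting in web.config requires.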
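The queue name appears in three places in this sample:

- the QueueName appSetting used by the factory
- the service endpoint address in web.config
- the client endpoint address in app.config

If these get out of sync, the factory creates one queue while WCF listens on another. The two naming forms also differ: System.Messaging uses `private$`, whereas a net.msmq URI uses `private`. The following is a small hypothetical helper, not from the repository, that derives both forms from a single name. It assumes a local private queue, as in this sample.

```csharp
using System;

public static class QueueNames
{
    // Path of a local private queue, as used by System.Messaging.MessageQueue
    public static string ToMsmqPath(string queueName)
    {
        return string.Format(@".\private$\{0}", queueName);
    }

    // WCF net.msmq address of the same local private queue ("private" without "$")
    public static string ToNetMsmqAddress(string queueName)
    {
        return string.Format("net.msmq://localhost/private/{0}", queueName);
    }

    public static void Main()
    {
        Console.WriteLine(ToMsmqPath("DemoQueue3"));       // .\private$\DemoQueue3
        Console.WriteLine(ToNetMsmqAddress("DemoQueue3")); // net.msmq://localhost/private/DemoQueue3
    }
}
```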

The .svc file for the MSMQ service references the factory in its Factory attribute:

```
<%@ ServiceHost Language="C#" Debug="true" Service="WcfDemoNetMsmqBinding.Host.MessageQueueService" Factory="WcfDemoNetMsmqBinding.Host.MessageQueueFactory" %>
```
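The service contract itself is not listed in this article. With netMsmqBinding, every operation on the contract must be one-way, because MSMQ carries no reply. With a transactional queue, the operation typically runs inside the transaction that receives the message.

The following is a minimal sketch of what such a contract might look like. It uses the type names from web.config, but the `SendMessage` operation is hypothetical, and the repository's actual operations may differ.

```csharp
using System.Diagnostics;
using System.ServiceModel;

namespace WcfDemoNetMsmqBinding.Host
{
    [ServiceContract]
    public interface IMessageQueueService
    {
        // Queued operations must be one-way: there is no reply channel over MSMQ
        [OperationContract(IsOneWay = true)]
        void SendMessage(string message); // hypothetical operation name
    }

    public class MessageQueueService : IMessageQueueService
    {
        // The message is dequeued inside this transaction; if the method throws,
        // the transaction rolls back and the message stays in the queue
        [OperationBehavior(TransactionScopeRequired = true, TransactionAutoComplete = true)]
        public void SendMessage(string message)
        {
            Trace.WriteLine("Received: " + message);
        }
    }
}
```

If the operation keeps failing, WCF retries the message. With receiveErrorHandling="Move", the message eventually ends up in the queue's poison subqueue instead of blocking the queue.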

The WCF service itself is configured declaratively in web.config (it could be done in code, but I chose web.config for most of this sample). This is the web.config that defines the MSMQ service. As you can see, not much configuration is needed to get started with MSMQ and WCF:

```xml
<?xml version="1.0"?>
<configuration>
  <appSettings>
    <add key="aspnet:UseTaskFriendlySynchronizationContext" value="true" />
    <add key="QueueName" value="DemoQueue3" />
  </appSettings>
  <system.web>
    <compilation debug="true" targetFramework="4.6.1" />
    <httpRuntime targetFramework="4.6.1"/>
  </system.web>
  <system.serviceModel>
    <services>
      <service name="WcfDemoNetMsmqBinding.Host.MessageQueueService" behaviorConfiguration="NetMsmqBehavior">
        <endpoint contract="WcfDemoNetMsmqBinding.Host.IMessageQueueService" name="NetMsmqEndpoint"
                  binding="netMsmqBinding" bindingConfiguration="NetMsmq" address="net.msmq://localhost/private/DemoQueue3" />
      </service>
    </services>
    <bindings>
      <netMsmqBinding>
        <binding name="NetMsmq" durable="true" exactlyOnce="true" receiveErrorHandling="Move" useActiveDirectory="False" queueTransferProtocol="Native">
          <security mode="Message">
            <message clientCredentialType="Certificate"/>
          </security>
        </binding>
      </netMsmqBinding>
    </bindings>
    <behaviors>
      <serviceBehaviors>
        <behavior name="NetMsmqBehavior">
          <!-- To avoid disclosing metadata information, set the values below to false before deployment -->
          <serviceMetadata httpGetEnabled="true" />
          <!-- To receive exception details in faults for debugging purposes, set the value below to true. Set to false before deployment to avoid disclosing exception information -->
          <serviceDebug includeExceptionDetailInFaults="true"/>
          <serviceCredentials>
            <serviceCertificate findValue="MSMQWcfDemoserver" storeLocation="LocalMachine" storeName="My" x509FindType="FindBySubjectName" />
            <clientCertificate>
              <certificate findValue="MSMQWcfDemoClient" storeLocation="LocalMachine" storeName="My" x509FindType="FindBySubjectName" />
              <authentication certificateValidationMode="PeerTrust" />
            </clientCertificate>
          </serviceCredentials>
        </behavior>
      </serviceBehaviors>
    </behaviors>
  </system.serviceModel>
  <system.webServer>
    <modules runAllManagedModulesForAllRequests="true"/>
    <!--
        To browse web app root directory during debugging, set the value below to true.
        Set to false before deployment to avoid disclosing web app folder information.
      -->
    <directoryBrowse enabled="true"/>
  </system.webServer>
</configuration>
```

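Note that the two certificates generated for this sample are set up in the serviceCredentials element, through its serviceCertificate and clientCertificate elements. With certificateValidationMode="PeerTrust", a client certificate is only accepted if it is present in the Trusted People certificate store.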
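As mentioned, the same setup could be done in code. The following is a hedged sketch of a code equivalent of the "NetMsmq" binding and the serviceCredentials element above. The class and method names are hypothetical and not part of the sample. Certificate lookups use the same subject names as web.config.

```csharp
using System.Security.Cryptography.X509Certificates;
using System.ServiceModel;
using System.ServiceModel.Description;
using System.ServiceModel.Security;

public static class DemoBindings
{
    // Code equivalent of the "NetMsmq" binding configuration in web.config
    public static NetMsmqBinding CreateNetMsmqBinding()
    {
        var binding = new NetMsmqBinding(NetMsmqSecurityMode.Message)
        {
            Durable = true,                                   // messages are stored on disk, not in memory
            ExactlyOnce = true,                               // requires a transactional queue
            ReceiveErrorHandling = ReceiveErrorHandling.Move, // repeatedly failing messages go to the poison subqueue
            UseActiveDirectory = false,
            QueueTransferProtocol = QueueTransferProtocol.Native
        };
        // The client authenticates with an X.509 certificate at the message level
        binding.Security.Message.ClientCredentialType = MessageCredentialType.Certificate;
        return binding;
    }

    // Code equivalent of the serviceCredentials element in web.config.
    // SetCertificate looks the certificates up right away, so they must already be installed.
    public static void ConfigureCredentials(ServiceCredentials credentials)
    {
        credentials.ServiceCertificate.SetCertificate(
            StoreLocation.LocalMachine, StoreName.My, X509FindType.FindBySubjectName, "MSMQWcfDemoserver");
        credentials.ClientCertificate.SetCertificate(
            StoreLocation.LocalMachine, StoreName.My, X509FindType.FindBySubjectName, "MSMQWcfDemoClient");
        credentials.ClientCertificate.Authentication.CertificateValidationMode =
            X509CertificateValidationMode.PeerTrust;
    }
}
```

With a self-hosted ServiceHost, you would pass the binding to AddServiceEndpoint and call ConfigureCredentials(host.Credentials) before opening the host. With IIS hosting (WCF 4.5 and later), the equivalent hook is a static Configure(ServiceConfiguration config) method on the service class.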

The client points to the same queue:

`net.msmq://localhost/private/DemoQueue3`

Note that this example has been tested with the client and the server on the same machine. If the server were on a different machine, MSMQ on the client would hold outgoing messages in a local queue until the server can receive them. This keeps messages durable in a scenario with several computers.

The client sets up the corresponding certificates in the app.config:

```xml
<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <startup>
    <supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.6.1" />
  </startup>
  <system.serviceModel>
    <bindings>
      <netMsmqBinding>
        <binding name="NetMsmqEndpoint">
          <security mode="Message">
            <message clientCredentialType="Certificate" algorithmSuite="Default" />
          </security>
        </binding>
      </netMsmqBinding>
    </bindings>
    <behaviors>
      <endpointBehaviors>
        <behavior name="MsmqEndpointBehavior">
          <clientCredentials>
            <clientCertificate storeName="My" storeLocation="LocalMachine" x509FindType="FindBySubjectName"
                               findValue="MSMQWcfDemoClient" />
            <serviceCertificate>
              <defaultCertificate storeLocation="LocalMachine" storeName="My" x509FindType="FindBySubjectName" findValue="MSMQWcfDemoserver"/>
            </serviceCertificate>
          </clientCredentials>
        </behavior>
      </endpointBehaviors>
    </behaviors>
    <client>
      <endpoint address="net.msmq://localhost/private/DemoQueue3" binding="netMsmqBinding"
                bindingConfiguration="NetMsmqEndpoint" behaviorConfiguration="MsmqEndpointBehavior" contract="MessageQueueServiceProxy.IMessageQueueService"
                name="NetMsmqEndpoint">
        <identity>
          <dns value="MSMQWcfDemoServer"/>
        </identity>
      </endpoint>
    </client>
  </system.serviceModel>
  <system.web>
    <compilation debug="true" />
  </system.web>
</configuration>
```
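The sample's client calls the service through a generated proxy. As a hedged alternative, the following sketch uses ChannelFactory with the NetMsmqEndpoint endpoint from the app.config above. The hand-written contract and its SendMessage operation are hypothetical and must match the service's real contract. The client binding leaves exactlyOnce at its default of true, so the call is wrapped in a TransactionScope. The message is then only written to the queue if the scope completes.

```csharp
using System;
using System.ServiceModel;
using System.Transactions;

namespace MessageQueueServiceProxy
{
    // Hypothetical hand-written copy of the contract; its full name matches the
    // contract attribute of the client endpoint in app.config
    [ServiceContract]
    public interface IMessageQueueService
    {
        [OperationContract(IsOneWay = true)]
        void SendMessage(string message);
    }
}

public static class ClientDemo
{
    public static void Main()
    {
        // "NetMsmqEndpoint" is the client endpoint name in app.config
        var factory = new ChannelFactory<MessageQueueServiceProxy.IMessageQueueService>("NetMsmqEndpoint");
        MessageQueueServiceProxy.IMessageQueueService channel = factory.CreateChannel();

        // The send enlists in this transaction; without scope.Complete() nothing is enqueued
        using (var scope = new TransactionScope(TransactionScopeOption.Required))
        {
            channel.SendMessage("Hello from the client at " + DateTime.Now);
            scope.Complete();
        }

        ((IClientChannel)channel).Close();
        factory.Close();
    }
}
```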

All in all, we end up with a sample where a client and a service communicate through WCF with durable, ordered requests, using NetMsmqBinding.

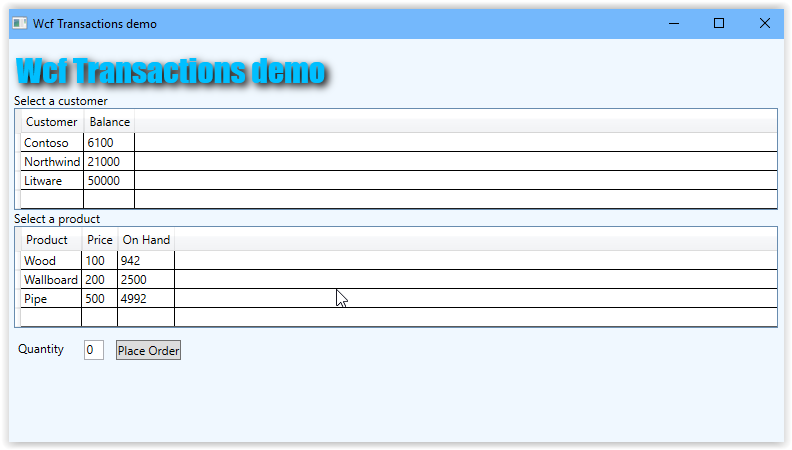

As I have shown in earlier articles, each MSMQ message actually contains a WCF message. As the picture shows, the WCF message itself is protected, using the self-signed certificates we generated.

The PowerShell script InspectMessageQueueWcfContent.ps1 is included if you want to inspect the WCF messages inside the queue.
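If you would rather stay in C#, System.Messaging can peek at the raw message bodies. This is a rough sketch under stated assumptions:

- It is not a port of the included PowerShell script, whose contents are not shown here.
- The class name is hypothetical.
- The queue name DemoQueue3 is taken from web.config.

The WCF message is stored in an encoded binary form, so the sketch only prints the printable characters of each body. Expect readable fragments rather than clean XML.

```csharp
using System;
using System.IO;
using System.Messaging;
using System.Text;

public static class QueueInspector
{
    // Renders bytes as text, replacing non-printable bytes with '.', like the text column of a hex viewer
    public static string ToPrintable(byte[] bytes)
    {
        var builder = new StringBuilder(bytes.Length);
        foreach (byte b in bytes)
        {
            builder.Append(b >= 32 && b <= 126 ? (char)b : '.');
        }
        return builder.ToString();
    }

    public static void Main()
    {
        // Assumes the queue name DemoQueue3 from the sample's web.config
        using (var queue = new MessageQueue(@".\private$\DemoQueue3"))
        {
            // Read all message properties, including the body
            queue.MessageReadPropertyFilter.SetAll();

            // GetAllMessages returns a snapshot; the messages are not removed from the queue
            foreach (Message message in queue.GetAllMessages())
            {
                using (var buffer = new MemoryStream())
                {
                    message.BodyStream.CopyTo(buffer);
                    byte[] body = buffer.ToArray();
                    Console.WriteLine("Message {0}, {1} bytes:", message.Id, body.Length);
                    Console.WriteLine(ToPrintable(body));
                }
            }
        }
    }
}
```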

If you enable journaling on the MSMQ queue that this solution creates, you can still see the MSMQ messages after they have been received and consumed, using Computer Management:

compmgmt.msc
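Journaling can also be switched on from code. The following is a minimal sketch with a hypothetical class name, assuming the queue path of the sample's DemoQueue3 queue. Keep in mind that the journal keeps growing until it is purged or reaches its size limit, so it is mostly useful while debugging.

```csharp
using System;
using System.Messaging;

public static class JournalSetup
{
    // Turns on journaling for an existing queue: a copy of every message
    // received from the queue is then kept in the queue's journal
    public static void EnableJournal(string queuePath)
    {
        using (var queue = new MessageQueue(queuePath))
        {
            queue.UseJournalQueue = true;
        }
    }

    public static void Main()
    {
        // Assumes the queue name DemoQueue3 from the sample's web.config
        EnableJournal(@".\private$\DemoQueue3");
        Console.WriteLine("Journaling enabled");
    }
}
```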

Alternatively, you can use QueueExplorer, which is available as a trial. Like my PowerShell script, this tool can show the WCF message inside the MSMQ message. It also offers syntax coloring and other features for navigating your MSMQ queues quickly.

Is MSMQ with WCF an alternative to HTTP, TCP or federated bindings in ordinary scenarios? At the least, this article demonstrates how WCF lets developers use MSMQ as the communication protocol between client and server. I hope you found this article interesting.