In this article I will present code that adds functionality to IMemoryCache in Asp.Net Core, or in .NET Core in general.

The code has been tested in Asp.Net Core 3.1. I have tested a generic memory cache together with middleware for adding, removing and listing cached values. Usually you do not want to expose caching through a public API, but perhaps your API resides in a safe(r) intranet zone and you want to cache different kinds of objects.

This article will teach you the principles of building a generic memory cache for (Asp).Net Core and of wiring up cache functionality to REST API(s).

The code of this article is available on Github:

git clone https://github.com/toreaurstadboss/GenericMemoryCacheAspNetCore.git

We start with our Generic Memory cache. It has some features:

- The primary feature is to offer generic functionality and STRONGLY TYPED access to the IMemoryCache

- Strongly typed access means you can use the cache (memory) as a repository and easily add, remove, update and get multiple items in a strongly typed fashion, including compound objects (class instances, nested objects, whatever you need). If you want to use the Generic Memory Cache together with REST APIs, the objects should be serializable to JSON.

- You add homogeneous objects of the same type to a prefixed part of the cache (by prefixed keys) to help avoid key collisions with other parts of the same process

- If you add the same key twice, the item will not be added again - you must update instead

- Additional methods exist for removing, updating and clearing the memory cache.

- The Generic memory cache wraps IMemoryCache in Asp.Net Core, which does the actual caching in memory on the workstation or server running your application.
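The core pattern behind these features (prefixed keys, add-only-once behaviour and strongly typed reads) can be sketched directly against IMemoryCache. The `PrefixedCacheSketch` class below is hypothetical and not part of the repository. It assumes only the Microsoft.Extensions.Caching.Memory package. For expiration it uses `MemoryCacheEntryOptions.AbsoluteExpirationRelativeToNow` rather than the cancellation token that GenericMemoryCache uses.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical sketch, not the article's GenericMemoryCache: shows prefixed keys,
// add-only-if-absent semantics and strongly typed reads on top of IMemoryCache.
public static class PrefixedCacheSketch
{
    private static readonly object Gate = new object();

    private static string Prefixed(string prefix, string key) => $"{prefix}_{key}";

    // Adds the item only if the prefixed key is not already in use; returns false otherwise.
    public static bool TryAdd<T>(IMemoryCache cache, string prefix, string key, T item, TimeSpan? timeToLive = null)
    {
        string fullKey = Prefixed(prefix, key);
        lock (Gate)
        {
            if (cache.TryGetValue(fullKey, out _))
                return false;

            var options = new MemoryCacheEntryOptions
            {
                // null means the entry does not expire on its own
                AbsoluteExpirationRelativeToNow = timeToLive
            };
            cache.Set(fullKey, item, options);
            return true;
        }
    }

    // Strongly typed read: returns default(T) when the key is missing.
    public static T Get<T>(IMemoryCache cache, string prefix, string key) =>
        cache.TryGetValue(Prefixed(prefix, key), out T value) ? value : default(T);
}
```

With the prefix "volvoer", the key "240" is stored as "volvoer_240". The same key under another prefix is a different cache entry.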

GenericMemoryCache.cs

```csharp
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Primitives;
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading;

namespace SomeAcme.SomeUtilNamespace
{
    /// <summary>
    /// Thread safe memory cache for generic use - wraps IMemoryCache
    /// </summary>
    /// <typeparam name="TCacheItemData">Payload to store in the memory cache</typeparam>
    /// <remarks>... multiple parallel importing sessions</remarks>
    public class GenericMemoryCache<TCacheItemData> : IGenericMemoryCache<TCacheItemData>
    {
        private readonly string _prefixKey;
        private readonly int _defaultExpirationInSeconds;
        private static readonly object _locker = new object();

        public GenericMemoryCache(IMemoryCache memoryCache, string prefixKey, int defaultExpirationInSeconds = 0)
        {
            defaultExpirationInSeconds = Math.Abs(defaultExpirationInSeconds); //checking if a negative value was passed into the constructor.
            _prefixKey = prefixKey;
            Cache = memoryCache;
            _defaultExpirationInSeconds = defaultExpirationInSeconds;
        }

        /// <summary>
        /// Cache object if direct access is desired. Only allow exposing this for inherited types.
        /// </summary>
        protected IMemoryCache Cache { get; }

        //to avoid IMemoryCache collisions with other parts of the same process, each cache key is always prefixed with the prefix set by the constructor of this class.
        public string PrefixKey(string key) => $"{_prefixKey}_{key}";

        /// <summary>
        /// Adds an item to memory cache, unless the key is already in use
        /// </summary>
        /// <param name="key">Cache key, prefixed automatically if needed</param>
        /// <param name="itemToCache">Item to store</param>
        /// <returns>true if the item was added, false if the key already exists or an error occurred</returns>
        public bool AddItem(string key, TCacheItemData itemToCache)
        {
            try
            {
                if (!key.StartsWith(_prefixKey))
                    key = PrefixKey(key);

                lock (_locker)
                {
                    if (!Cache.TryGetValue(key, out TCacheItemData _))
                    {
                        //the entry expires when the token source cancels; -1 means it never cancels
                        var cts = new CancellationTokenSource(_defaultExpirationInSeconds > 0 ?
                            _defaultExpirationInSeconds * 1000 : -1);
                        var cacheEntryOptions = new MemoryCacheEntryOptions().AddExpirationToken(new CancellationChangeToken(cts.Token));
                        Cache.Set(key, itemToCache, cacheEntryOptions);
                        return true;
                    }
                }
                return false; //Item not added, the key already exists
            }
            catch (Exception err)
            {
                Debug.WriteLine(err);
                return false;
            }
        }

        /// <summary>
        /// Lists all cached values of type T
        /// </summary>
        public virtual List<T> GetValues<T>()
        {
            lock (_locker)
            {
                //GetValues<ICacheEntry>() is not a member of IMemoryCache; an extension method providing it is assumed (not shown).
                //Values are filtered by type only, not by the key prefix.
                var values = Cache.GetValues<ICacheEntry>().Where(c => c.Value is T).Select(c => (T)c.Value).ToList();
                return values;
            }
        }

        /// <summary>
        /// Retrieves a cache item, or default(TCacheItemData) if the key is not found
        /// </summary>
        /// <param name="key">Cache key, prefixed automatically if needed</param>
        public TCacheItemData GetItem(string key)
        {
            try
            {
                if (!key.StartsWith(_prefixKey))
                    key = PrefixKey(key);

                lock (_locker)
                {
                    if (Cache.TryGetValue(key, out TCacheItemData cachedItem))
                    {
                        return cachedItem;
                    }
                }
                return default(TCacheItemData);
            }
            catch (Exception err)
            {
                Debug.WriteLine(err);
                return default(TCacheItemData);
            }
        }

        /// <summary>
        /// Adds the item if the key is not in use, otherwise replaces the existing item
        /// </summary>
        public bool SetItem(string key, TCacheItemData itemToCache)
        {
            try
            {
                if (!key.StartsWith(_prefixKey))
                    key = PrefixKey(key);

                lock (_locker)
                {
                    if (GetItem(key) == null)
                    {
                        return AddItem(key, itemToCache); //no item yet: add it
                    }
                    return UpdateItem(key, itemToCache); //item exists: replace it
                }
            }
            catch (Exception err)
            {
                Debug.WriteLine(err);
                return false;
            }
        }

        /// <summary>
        /// Updates an item in the cache by removing and re-adding it, which also restarts its expiration
        /// </summary>
        public bool UpdateItem(string key, TCacheItemData itemToCache)
        {
            if (!key.StartsWith(_prefixKey))
                key = PrefixKey(key);

            lock (_locker)
            {
                TCacheItemData existingItem = GetItem(key);
                if (existingItem != null)
                {
                    //always remove the existing item before updating
                    RemoveItem(key);
                }
                return AddItem(key, itemToCache);
            }
        }

        /// <summary>
        /// Removes an item from the cache
        /// </summary>
        /// <returns>true if the key existed and was removed</returns>
        public bool RemoveItem(string key)
        {
            if (!key.StartsWith(_prefixKey))
                key = PrefixKey(key);

            lock (_locker)
            {
                if (Cache.TryGetValue(key, out _))
                {
                    Cache.Remove(key);
                    return true;
                }
            }
            return false;
        }

        public void AddItems(Dictionary<string, TCacheItemData> itemsToCache)
        {
            foreach (var kvp in itemsToCache)
                AddItem(kvp.Key, kvp.Value);
        }

        /// <summary>
        /// Clear all cache keys starting with the known prefix passed into the constructor.
        /// </summary>
        public void ClearAll()
        {
            lock (_locker)
            {
                //GetKeys<string>() is likewise assumed to be an extension method (not shown).
                List<string> cacheKeys = Cache.GetKeys<string>().Where(k => k.StartsWith(_prefixKey)).ToList();
                foreach (string cacheKey in cacheKeys)
                {
                    Cache.Remove(cacheKey);
                }
            }
        }
    }
}
```
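GetValues and ClearAll call `GetValues<ICacheEntry>()` and `GetKeys<string>()`. These are not members of IMemoryCache, so they must come from extension methods that the article does not show. Such helpers are commonly written with reflection over MemoryCache's private entry collection, which can break between framework versions. If you want to avoid depending on MemoryCache internals, one option is to record the keys yourself.

The `KeyTrackingCache<T>` class below is a hypothetical sketch of that approach, not code from the repository. It remembers every key it stores and uses a post-eviction callback to forget keys that the cache evicts on its own.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical sketch: a prefixed cache that tracks its own keys, so values can be
// listed and cleared without enumerating MemoryCache's internal entries.
public class KeyTrackingCache<T>
{
    private readonly IMemoryCache _cache;
    private readonly string _prefix;

    // Maps each tracked cache key to a token identifying the entry currently stored under it.
    private readonly ConcurrentDictionary<string, object> _keys = new ConcurrentDictionary<string, object>();

    public KeyTrackingCache(IMemoryCache cache, string prefix)
    {
        _cache = cache;
        _prefix = prefix;
    }

    private string Prefixed(string key) => $"{_prefix}_{key}";

    public void Set(string key, T value)
    {
        string fullKey = Prefixed(key);
        var token = new object();
        _keys[fullKey] = token;

        var options = new MemoryCacheEntryOptions()
            .RegisterPostEvictionCallback(OnEvicted, token);
        _cache.Set(fullKey, value, options);
    }

    public void Remove(string key)
    {
        string fullKey = Prefixed(key);
        _keys.TryRemove(fullKey, out _);
        _cache.Remove(fullKey);
    }

    public List<T> GetValues()
    {
        var values = new List<T>();
        foreach (string fullKey in _keys.Keys)
        {
            // A tracked entry may already have expired; TryGetValue then returns false.
            if (_cache.TryGetValue(fullKey, out T value))
                values.Add(value);
        }
        return values;
    }

    public void ClearAll()
    {
        foreach (string fullKey in _keys.Keys)
        {
            _keys.TryRemove(fullKey, out _);
            _cache.Remove(fullKey);
        }
    }

    // Eviction callbacks run asynchronously. Only forget the key if it still maps to the
    // evicted entry's token, so an entry that replaced it under the same key stays tracked.
    private void OnEvicted(object key, object value, EvictionReason reason, object state)
    {
        ((ICollection<KeyValuePair<string, object>>)_keys)
            .Remove(new KeyValuePair<string, object>((string)key, state));
    }
}
```

A trade-off of this design is that GetValues and ClearAll only see entries stored through the tracker. That is usually what the prefix is meant to achieve anyway.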

There are different ways of making use of the generic memory cache above. The simplest use case is to instantiate it in a controller and add cache items as needed. As you can see, the Generic Memory Cache offers strongly typed access to the memory cache.

Let's look at how we can also register the Generic Memory Cache as a service.

startup.cs

```csharp
// This method gets called by the runtime. Use this method to add services to the container.
public void ConfigureServices(IServiceCollection services)
{
    services.AddControllers();
    services.AddMemoryCache();
    // Resolve the IMemoryCache registered by AddMemoryCache(), so this cache shares the same
    // underlying MemoryCache as the rest of the application (including the middleware shown later).
    services.AddSingleton<GenericMemoryCache<WeatherForecast>>(sp =>
        new GenericMemoryCache<WeatherForecast>(sp.GetRequiredService<IMemoryCache>(), "WEATHER_FORECASTS", 120));
}
```

In the sample above we register the generic memory cache as a singleton (the memory is shared either way, so a transient or scoped generic memory cache would be less logical). We can then inject it like this:

WeatherForecastController.cs

```csharp
private readonly ILogger<WeatherForecastController> _logger;
private readonly GenericMemoryCache<WeatherForecast> _genericMemoryCache;

public WeatherForecastController(ILogger<WeatherForecastController> logger, GenericMemoryCache<WeatherForecast> genericMemoryCache)
{
    _logger = logger;
    _genericMemoryCache = genericMemoryCache;
}
```

This way of injecting the generic memory cache is cumbersome, since every item type needs its own registration and we would like a more dynamic way of specifying the type of the memory cache. We could register the generic memory cache with object as the type argument, but then we lose the strong typing, since the items in the cache are only handled as object.

Instead, I have looked into defining a custom middleware for working against the generic memory cache. In production you would of course protect this cache so that it is not readily available to everyone, for example by requiring a token or similar in the REST API calls. The middleware shown next is just a suggestion of how we can build up a generic memory cache in Asp.Net Core via REST API calls. It can be handy if you have consumers / clients with data they want to store in a cache on demand, and the possible uses in an Asp.Net Core environment are many, provided you choose to offer such functionality. In many cases you would instead use my GenericMemoryCache more directly where needed and not expose it at all. But for those who want to see how it can be made available in a REST API, the following middleware offers a suggestion.
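The protection mentioned above could, for example, be a shared token that clients send with every cache call. Below is a sketch of a hypothetical `CacheApiToken.IsValid` helper. Where the expected token comes from (configuration, a secret store) is an assumption left open here. The helper compares the tokens with `CryptographicOperations.FixedTimeEquals` (available since .NET Core 2.1). For equal-length inputs, that method does not stop at the first mismatching byte, so the comparison time does not reveal how much of a guessed token was correct.

```csharp
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: compares a presented API token with the expected one
// without leaking, through timing, how many leading bytes matched.
public static class CacheApiToken
{
    public static bool IsValid(string presentedToken, string expectedToken)
    {
        if (string.IsNullOrEmpty(presentedToken) || string.IsNullOrEmpty(expectedToken))
            return false;

        byte[] presented = Encoding.UTF8.GetBytes(presentedToken);
        byte[] expected = Encoding.UTF8.GetBytes(expectedToken);

        // Returns false for different lengths; equal-length inputs are compared in full.
        return CryptographicOperations.FixedTimeEquals(presented, expected);
    }
}
```

In the middleware it could be checked at the top of InvokeAsync, for example with a hypothetical `X-Cache-Token` header: `if (!CacheApiToken.IsValid(context.Request.Headers["X-Cache-Token"], expectedToken)) { context.Response.StatusCode = StatusCodes.Status401Unauthorized; return; }`.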

Startup.cs

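```csharp
// This method gets called by the runtime. Use this method to configure the HTTP request pipeline.
public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
{
    if (env.IsDevelopment())
    {
        app.UseDeveloperExceptionPage();
    }

    // ..

    // UseGenericMemoryCache<TItemData> is generic and its type argument cannot be inferred from
    // the options, so it must be given explicitly. object is an assumption here; the middleware
    // takes the actual item type from the "type" query parameter.
    app.UseGenericMemoryCache<object>(new GenericMemoryCacheOptions
    {
        PrefixKey = "volvoer",
        DefaultExpirationInSeconds = 600
    });

    // ..
}
```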

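We first call UseGenericMemoryCache to register the middleware, and we also set the PrefixKey to "volvoer" and the default expiration to ten minutes (600 seconds). Note that the middleware as shown below stores these options but does not use them yet: the prefix is read from the "prefix" query parameter and the cache is created without expiration.

Instead, we will use Postman afterwards to send REST API calls that build up the contents of the cache.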

The UseMiddleware extension method is used in the extension method that is added to offer this functionality:

GenericMemoryCacheExtensions.cs

```csharp
using Microsoft.AspNetCore.Builder;

namespace SomeAcme.SomeUtilNamespace
{
    public static class GenericMemoryCacheExtensions
    {
        public static IApplicationBuilder UseGenericMemoryCache<TItemData>(this IApplicationBuilder builder, GenericMemoryCacheOptions options) where TItemData : class
        {
            return builder.UseMiddleware<GenericMemoryCacheMiddleware<TItemData>>(options);
        }
    }
}
```
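The `GenericMemoryCacheOptions` class passed to the extension method is not shown in the article. Startup.cs sets its `PrefixKey` and `DefaultExpirationInSeconds` properties, and the middleware constructor reads them. Judging only from that usage, a minimal version could look like the sketch below. The repository's actual class may contain more.

```csharp
namespace SomeAcme.SomeUtilNamespace
{
    // Assumed shape, inferred from its usage in Startup.cs and GenericMemoryCacheMiddleware.
    public class GenericMemoryCacheOptions
    {
        // Prefix applied to cache keys ("volvoer" in the Startup.cs sample)
        public string PrefixKey { get; set; }

        // Default lifetime of cache entries in seconds; 0 means no expiration,
        // matching how GenericMemoryCache treats defaultExpirationInSeconds
        public int DefaultExpirationInSeconds { get; set; }
    }
}
```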

The middleware looks like this (it could easily be extended to cover more functions of the API):

GenericMemoryCacheMiddleware.cs

```csharp
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Caching.Memory;
using Newtonsoft.Json;
using System;
using System.IO;
using System.Text;
using System.Threading.Tasks;

namespace SomeAcme.SomeUtilNamespace
{
    public class GenericMemoryCacheMiddleware<TCacheItemData> where TCacheItemData : class
    {
        private readonly RequestDelegate _next;
        private readonly string _prefixKey;
        private readonly int _defaultExpirationTimeInSeconds;

        public GenericMemoryCacheMiddleware(RequestDelegate next, GenericMemoryCacheOptions options)
        {
            if (options == null)
            {
                throw new ArgumentNullException(nameof(options));
            }
            _next = next;
            _prefixKey = options.PrefixKey;
            _defaultExpirationTimeInSeconds = options.DefaultExpirationInSeconds;
        }

        public async Task InvokeAsync(HttpContext context, IMemoryCache memoryCache)
        {
            context.Request.EnableBuffering(); //do this to be able to re-read the body multiple times without consuming it. (asp.net core 3.1)

            if (HttpMethods.IsPost(context.Request.Method))
            {
                if (IsDefinedCacheOperation("addtocache", context))
                {
                    // Leave the body open so the next middleware can read it.
                    using (var reader = new StreamReader(
                        context.Request.Body,
                        encoding: Encoding.UTF8,
                        detectEncodingFromByteOrderMarks: false,
                        bufferSize: 4096,
                        leaveOpen: true))
                    {
                        var body = await reader.ReadToEndAsync();
                        if (!string.IsNullOrWhiteSpace(body))
                        {
                            string cacheKey = context.Request.Query["cachekey"].ToString();
                            if (context.Request.Query.ContainsKey("type"))
                            {
                                var typeArgs = CreateGenericCache(context, memoryCache, out var cache);
                                // Deserialize the JSON body into the requested item type
                                var payloadItem = JsonConvert.DeserializeObject(body, typeArgs[0]);
                                var addMethod = cache.GetType().GetMethod("AddItem");
                                addMethod?.Invoke(cache, new object[] { cacheKey, payloadItem });
                            }
                            else
                            {
                                // Not reachable yet: IsDefinedCacheOperation requires the "type" query parameter by default
                                var cache = new GenericMemoryCache<object>(memoryCache, cacheKey, 0);
                                //TODO: implement
                            }
                        }
                    }
                    // Reset the request body stream position so the next middleware can read it
                    context.Request.Body.Position = 0;
                }
            }

            if (HttpMethods.IsDelete(context.Request.Method))
            {
                if (IsDefinedCacheOperation("removeitemfromcache", context))
                {
                    CreateGenericCache(context, memoryCache, out var cache);
                    var removeMethod = cache.GetType().GetMethod("RemoveItem");
                    string cacheKey = context.Request.Query["cachekey"].ToString();
                    removeMethod?.Invoke(cache, new object[] { cacheKey });
                }
            }

            if (HttpMethods.IsGet(context.Request.Method))
            {
                if (IsDefinedCacheOperation("getvaluesfromcache", context))
                {
                    var typeArgs = CreateGenericCache(context, memoryCache, out var cache);
                    var getValuesMethod = cache.GetType().GetMethod("GetValues");
                    if (getValuesMethod != null)
                    {
                        // GetValues<T> is generic, so close it over the requested item type first
                        var genericGetValuesMethod = getValuesMethod.MakeGenericMethod(typeArgs);
                        var existingValuesInCache = genericGetValuesMethod.Invoke(cache, null);
                        context.Response.ContentType = "application/json";
                        if (existingValuesInCache != null)
                        {
                            await context.Response.WriteAsync(JsonConvert.SerializeObject(existingValuesInCache));
                        }
                        else
                        {
                            await context.Response.WriteAsync("{}"); //return empty object literal
                        }
                        return; //terminate further processing - return data
                    }
                }
            }

            await _next(context);
        }

        private static bool IsDefinedCacheOperation(string cacheOperation, HttpContext context, bool requireType = true)
        {
            return context.Request.Query.ContainsKey(cacheOperation) &&
                   context.Request.Query.ContainsKey("prefix") && (!requireType || context.Request.Query.ContainsKey("type"));
        }

        private static Type[] CreateGenericCache(HttpContext context, IMemoryCache memoryCache, out object cache)
        {
            Type genericType = typeof(GenericMemoryCache<>);
            string cacheitemtype = context.Request.Query["type"].ToString();
            string prefix = context.Request.Query["prefix"].ToString();
            // "type" must be an assembly-qualified type name, e.g. Namespace.Type,AssemblyName
            Type itemType = Type.GetType(cacheitemtype);
            if (itemType == null)
            {
                throw new ArgumentException($"Could not resolve cache item type '{cacheitemtype}'.");
            }
            Type[] typeArgs = { itemType };
            Type cacheType = genericType.MakeGenericType(typeArgs);
            cache = Activator.CreateInstance(cacheType, memoryCache, prefix, 0);
            return typeArgs;
        }
    }
}
```

The middleware above currently supports adding items to the cache, removing them and listing them.
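The reflection steps in CreateGenericCache and in the GET/POST branches can be tried out in isolation: resolve a type from its name, close the open generic type over it, instantiate it, then look up and invoke a method by name. The sketch below uses `List<>` from the base class library as a stand-in for `GenericMemoryCache<>`, so it runs without the rest of the project. `ReflectionSketch`, `CreateClosedInstance` and `InvokeByName` are hypothetical names.

```csharp
using System;
using System.Reflection;

public static class ReflectionSketch
{
    // Same steps as CreateGenericCache: resolve the item type by name, close the
    // open generic type over it and create an instance via its parameterless constructor.
    public static object CreateClosedInstance(Type openGenericType, string itemTypeName)
    {
        // Without an assembly name, Type.GetType only searches the calling assembly and
        // the core library; for other types use "Namespace.Type,AssemblyName".
        Type itemType = Type.GetType(itemTypeName, throwOnError: false);
        if (itemType == null)
            throw new ArgumentException($"Could not resolve type '{itemTypeName}'.", nameof(itemTypeName));

        Type closedType = openGenericType.MakeGenericType(itemType);
        return Activator.CreateInstance(closedType);
    }

    // Looks up a method by name at run time and invokes it, like addMethod.Invoke in the middleware.
    public static object InvokeByName(object target, string methodName, params object[] args)
    {
        MethodInfo method = target.GetType().GetMethod(methodName);
        if (method == null)
            throw new MissingMethodException(target.GetType().FullName, methodName);
        return method.Invoke(target, args);
    }
}
```

`Type.GetType` loads any type the caller names. Before exposing this over HTTP, it is therefore worth considering an allow-list of accepted item types.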

I have used this business model to test it:

```csharp
namespace GenericMemoryCacheAspNetCore.Models
{
    public class Car
    {
        public Car()
        {
            NumberOfWheels = 4;
        }

        public string Make { get; set; }
        public string Model { get; set; }
        public int NumberOfWheels { get; set; }
    }
}
```

The following requests were tested to add three cars, then delete one and then list the remaining ones:

```http
# add three cars
POST https://localhost:44391/caching/addcar?addtocache&prefix=volvoer&cachekey=240&type=GenericMemoryCacheAspNetCore.Models.Car,GenericMemoryCacheAspNetCore
POST https://localhost:44391/caching/addcar?addtocache&prefix=volvoer&cachekey=Amazon&type=GenericMemoryCacheAspNetCore.Models.Car,GenericMemoryCacheAspNetCore
POST https://localhost:44391/caching/addcar?addtocache&prefix=volvoer&cachekey=Pv&type=GenericMemoryCacheAspNetCore.Models.Car,GenericMemoryCacheAspNetCore

# remove one
DELETE https://localhost:44391/caching?removeitemfromcache&prefix=volvoer&cachekey=Amazon&type=GenericMemoryCacheAspNetCore.Models.Car,GenericMemoryCacheAspNetCore

# list up the cars in the cache (items)
GET https://localhost:44391/caching/addcar?getvaluesfromcache&prefix=volvoer&type=GenericMemoryCacheAspNetCore.Models.Car,GenericMemoryCacheAspNetCore
```

For the POST requests, I have sent payloads in the body via Postman, such as this:

```json
{
  "Make": "Volvo",
  "Model": "Amazon"
}
```
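Instead of Postman, the same calls can be scripted. The sketch below builds the query strings used above and sends them with `HttpClient`. The `CacheApiClient` name and its methods are hypothetical. The type name is URL-encoded, so its comma is sent as `%2C`, which Asp.Net Core decodes again when reading the query. Note that the middleware as shown passes POST and DELETE requests on to the next middleware after handling them, so their status code depends on what else handles the path.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

// Hypothetical client for the cache middleware's query-string protocol.
public static class CacheApiClient
{
    // Builds e.g. {baseUrl}?addtocache&prefix=volvoer&cachekey=240&type=...
    public static string BuildUri(string baseUrl, string operation, string prefix, string typeName, string cacheKey = null)
    {
        var uri = new StringBuilder($"{baseUrl}?{operation}&prefix={Uri.EscapeDataString(prefix)}");
        if (cacheKey != null)
            uri.Append("&cachekey=").Append(Uri.EscapeDataString(cacheKey));
        uri.Append("&type=").Append(Uri.EscapeDataString(typeName));
        return uri.ToString();
    }

    // POSTs the JSON payload as a new cache item
    public static Task<HttpResponseMessage> AddAsync(HttpClient client, string baseUrl, string prefix, string typeName, string cacheKey, string json) =>
        client.PostAsync(BuildUri(baseUrl, "addtocache", prefix, typeName, cacheKey),
            new StringContent(json, Encoding.UTF8, "application/json"));

    public static Task<HttpResponseMessage> RemoveAsync(HttpClient client, string baseUrl, string prefix, string typeName, string cacheKey) =>
        client.DeleteAsync(BuildUri(baseUrl, "removeitemfromcache", prefix, typeName, cacheKey));

    // GET returns the cached values of the given type serialized as JSON
    public static async Task<string> GetValuesAsync(HttpClient client, string baseUrl, string prefix, string typeName)
    {
        using (HttpResponseMessage response = await client.GetAsync(BuildUri(baseUrl, "getvaluesfromcache", prefix, typeName)))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

For example, `CacheApiClient.AddAsync(client, "https://localhost:44391/caching/addcar", "volvoer", carType, "Amazon", "{\"Make\":\"Volvo\",\"Model\":\"Amazon\"}")` corresponds to the second POST above, where `carType` holds the assembly-qualified Car type name.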

Finally, we can see the cached data in our generic memory cache. As you can see, the REST API specifies the type argument by giving the type name including its namespace, followed by a comma and the assembly name (an assembly-qualified type name). So building a generic memory cache via a REST API is entirely feasible in Asp.Net Core. However, it should only be used in scenarios where such functionality is desired and the clients can be trusted in some way, or where access is restricted to privileged users via a token or a similar mechanism. You would of course never allow clients to freely send data into a server's memory cache only to see the server bogged down by memory usage. That was not the purpose of this article. The purpose was to acquaint the reader with IMemoryCache, a generic memory cache and middleware in Asp.Net Core. A generic memory cache gives you strongly typed access to the memory cache in Asp.Net Core, and the same concepts should carry over to .NET Core applications in general.

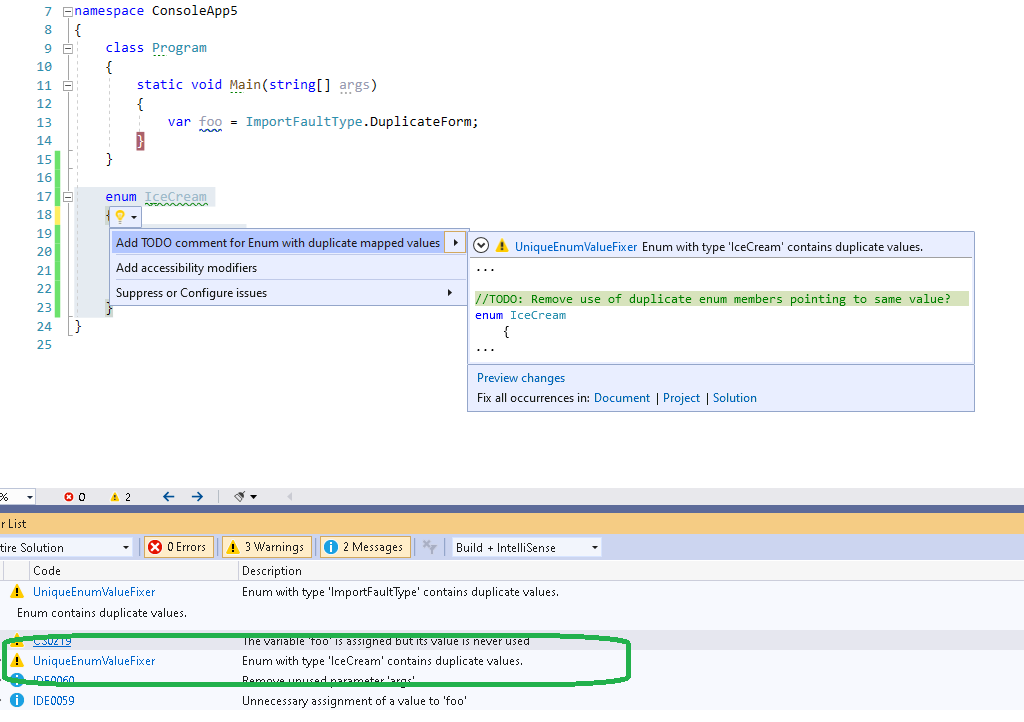

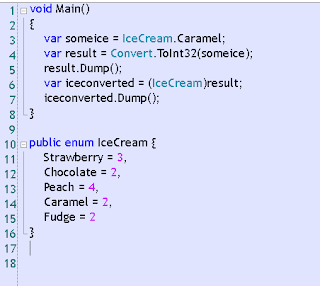

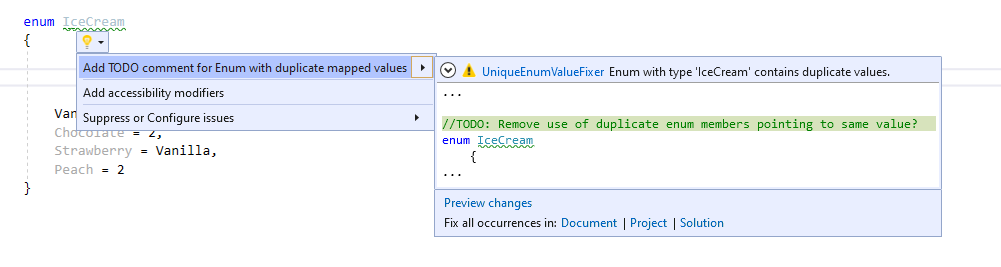
