https://github.com/toreaurstadboss/ImageClassificationMLNetBlazorDemo

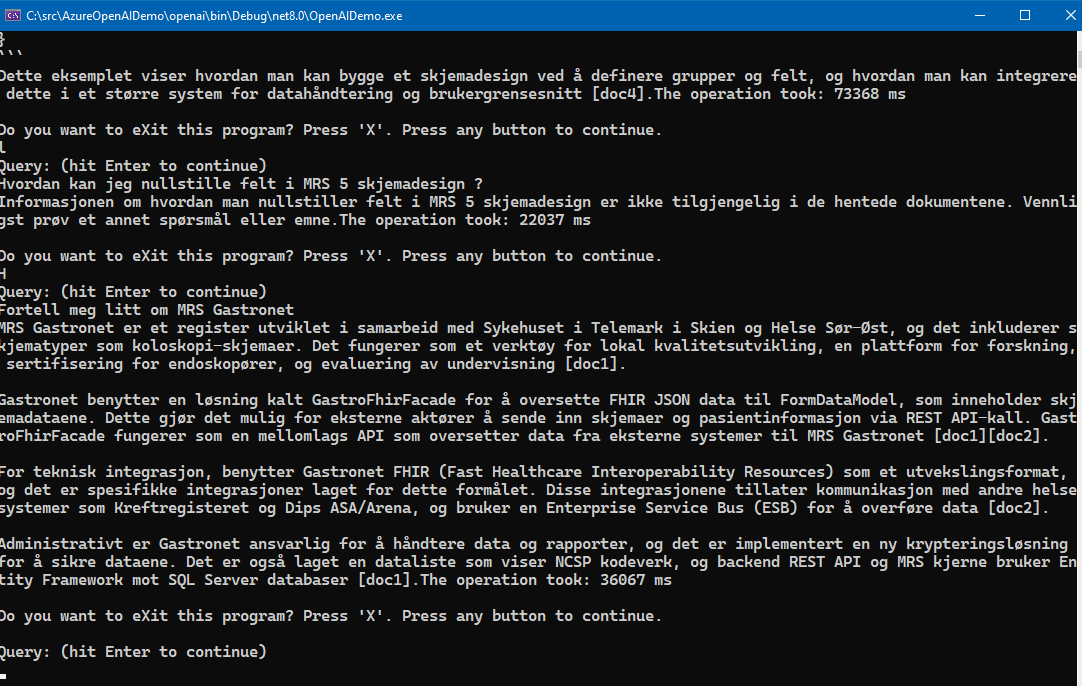

The screenshot above shows the demo application running.

ML.Net is Microsoft's machine learning library. Combined with the tooling inside VS 2022, it offers an easy way to use machine learning models locally on your CPU or GPU, or hosted in Azure cloud services. The ML.Net website has more information and documentation:

https://dotnet.microsoft.com/en-us/apps/ai/ml-dotnet

In the demo above I have trained the model to recognize either horses or moose. Both species are mammals and herbivores, and they are somewhat similar in appearance. I trained the machine learning model in this demo with only ten images of each category, and then used ten other test images to check whether the model correctly recognizes a horse or a moose. Even with just ten training images per category, it did not miss once. Of course, a real-world machine learning model would be trained on tens of thousands of images to handle all edge cases.

ML.Net is very easy to run. It can run locally on your own machine, using the CPU or GPU. The GPU must be CUDA compatible, which means you need an NVIDIA card, 8-series or newer. I have such a card in one of my laptops and have tested it. The following links point to the NVIDIA download pages for the software needed, as of March 2025, to run ML.Net image classification on GPUs:

Download CUDA 10.1

CUDA 10.1 can be downloaded from here: https://developer.nvidia.com/cuda-10.1-download-archive-base

cuDNN 7.6.4

cuDNN can be downloaded from here: https://developer.nvidia.com/rdp/cudnn-archive

Getting started with image classification using ML.Net

It is easiest to use VS 2022 to add an ML.Net machine learning model. Inside VS 2022, right-click your project, choose Add and then choose Machine Learning Model.

In case you do not see this option, open the Start menu and type in Visual Studio Installer. Now, hit the button Modify for your VS installation. Choose Individual Components and search for 'ml'. Select the ML.NET Model Builder. There is also a package called ML.NET Model Builder 2022; I selected that as well.

Choosing the scenario

After adding the machine learning model, the first page asks for a scenario. I choose Image Classification here, under the Computer Vision scenario category.

Choosing the environment

Then I hit the button Local. In the next step, I select Local (CPU). Note that I have also tested an NVIDIA CUDA-compatible GPU on another laptop. It worked great and should be preferred if you have a compatible GPU and have installed CUDA 10.1 and cuDNN 7.6.4 from the links above.

Hit the button Next Step.

Choosing the Data

It is time to train the machine learning model with data! I have gathered ten sample images each of moose and horses. You supply the data by pointing to a folder in which the images of each category are gathered in their own subfolder.
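Before pointing Model Builder at the folder, it can help to verify that every category subfolder actually contains images. The following is a minimal sketch of such a check, not part of the demo repo: the class name `TrainingDataInspector`, the method name and the list of accepted file extensions are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

public static class TrainingDataInspector
{
    // File extensions counted as images; an assumption for this sketch.
    private static readonly string[] ImageExtensions = { ".jpg", ".jpeg", ".png" };

    // Returns one entry per subfolder (category) of rootFolder,
    // mapped to the number of image files found directly inside it.
    public static Dictionary<string, int> CountImagesPerCategory(string rootFolder)
    {
        return Directory.GetDirectories(rootFolder)
            .ToDictionary(
                dir => Path.GetFileName(dir),
                dir => Directory.EnumerateFiles(dir)
                    .Count(file => ImageExtensions.Contains(
                        Path.GetExtension(file), StringComparer.OrdinalIgnoreCase)));
    }
}
```

Calling `TrainingDataInspector.CountImagesPerCategory` on the root image folder and printing each key and value gives a quick overview of how many samples each category has, so an empty or lopsided category is spotted before training.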

The next step is Train.

Training the model

Here you can hit the button Train again. When you have trained the model enough, you can hit the button Next step. Training the model takes some time, depending on whether you use the CPU or GPU and on the number of input images. For a small set like the one used here, 20 images in total, it usually takes seconds rather than minutes.

Loading up the image data and using the machine learning model

Note that ML.Net requires a web app with interactive server-side rendering; pure Blazor WASM apps are not supported. The following file shows how the Blazor server-side app is set up.

Program.cs

    using ImageClassificationMLNetBlazorDemo.Components;

    var builder = WebApplication.CreateBuilder(args);

    // Add services to the container.
    builder.Services.AddRazorComponents()
        .AddInteractiveServerComponents();

    var app = builder.Build();

    // Configure the HTTP request pipeline.
    if (!app.Environment.IsDevelopment())
    {
        app.UseExceptionHandler("/Error", createScopeForErrors: true);
        // The default HSTS value is 30 days. You may want to change this for production scenarios, see https://aka.ms/aspnetcore-hsts.
        app.UseHsts();
    }

    app.UseHttpsRedirection();

    app.UseStaticFiles();
    app.UseAntiforgery();

    app.MapRazorComponents<App>()
        .AddInteractiveServerRenderMode();

    app.Run();

The InteractiveServer render mode is set inside App.razor, on both the HeadOutlet and the Routes components.

App.razor

    <!DOCTYPE html>
    <html lang="en">

    <head>
        <meta charset="utf-8" />
        <meta name="viewport" content="width=device-width, initial-scale=1.0" />
        <base href="/" />
        <link rel="stylesheet" href="app.css" />
        <link rel="stylesheet" href="lib/bootstrap/css/bootstrap.min.css" />
        <link rel="stylesheet" href="ImageClassificationMLNetBlazorDemo.styles.css" />
        <link rel="icon" type="image/png" href="favicon.png" />
        <HeadOutlet @rendermode="InteractiveServer" />
    </head>

    <body>
        <Routes @rendermode="InteractiveServer" />
        <script src="_framework/blazor.web.js"></script>
    </body>

    </html>

The following code-behind of the Razor component Home.razor in the demo repo shows how a file uploaded with the InputFile control is handled in Blazor server-side.

Home.razor.cs

    @code {
        private string? _base64ImageSource = null;
        private string? _predictedLabel = "No classification";
        private IOrderedEnumerable<KeyValuePair<string, float>>? _predictedLabels = null;
        private int? _assessedPredictionQuality = null;
        private string? _errorMessage = null;

        // Handles the InputFile change event: validates the size, reads the image and classifies it.
        private async Task LoadFileAsync(InputFileChangeEventArgs e)
        {
            try
            {
                ResetPrivateFields();
                if (e.File.Size <= 0 || e.File.Size >= 2 * 1024 * 1024)
                {
                    _errorMessage = "Sorry, the uploaded image must be between 1 byte and 2 MB!";
                    return;
                }
                byte[] imageBytes = await GetImageBytes(e.File);
                _base64ImageSource = GetBase64ImageSourceString(e.File.ContentType, imageBytes);
                PredictImageClassification(imageBytes);
            }
            catch (Exception err)
            {
                Console.WriteLine(err);
            }
        }

        // Clears the state from a previous upload, including any earlier error message.
        private void ResetPrivateFields()
        {
            _base64ImageSource = null;
            _predictedLabel = null;
            _predictedLabels = null;
            _assessedPredictionQuality = null;
            _errorMessage = null;
        }

        // Maps the confidence of the predicted label to a dice score from 1 to 6.
        private int GetAssesPrediction()
        {
            int result = 1;
            if (_predictedLabel != null && _predictedLabels != null)
            {
                foreach (var label in _predictedLabels)
                {
                    if (label.Key == _predictedLabel)
                    {
                        result = label.Value switch
                        {
                            <= 0.50f => 1,
                            <= 0.70f => 2,
                            <= 0.80f => 3,
                            <= 0.85f => 4,
                            <= 0.90f => 5,
                            <= 1.0f => 6,
                            _ => 1 // default to dice score 1 if we get some other score here
                        };
                    }
                }
            }
            return result;
        }

        // Runs the trained model on the image bytes and stores the results for rendering.
        private void PredictImageClassification(byte[] imageBytes)
        {
            var input = new ModelInput
            {
                ImageSource = imageBytes
            };
            ModelOutput output = HorseOrMooseImageClassifier.Predict(input);
            _predictedLabel = output.PredictedLabel;
            _predictedLabels = HorseOrMooseImageClassifier.PredictAllLabels(input);
            _assessedPredictionQuality = GetAssesPrediction(); // check how good the prediction is, give a score from 1-6 (dice score!)
            StateHasChanged();
        }

        // Reads the uploaded file (at most 2 MB) into a byte array.
        private async Task<byte[]> GetImageBytes(IBrowserFile file)
        {
            using MemoryStream memoryStream = new();
            await using var stream = file.OpenReadStream(2 * 1024 * 1024, CancellationToken.None);
            await stream.CopyToAsync(memoryStream);
            return memoryStream.ToArray();
        }

        // Builds a data URI so the uploaded image can be shown in an img tag.
        private string GetBase64ImageSourceString(string contentType, byte[] bytes)
        {
            string preAmble = $"data:{contentType};base64,";
            return $"{preAmble}{(Convert.ToBase64String(bytes))}";
        }
    }
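The dice-score thresholds used in GetAssesPrediction can also be isolated into a small pure function, which makes them easy to check on their own. This is only a sketch with the same thresholds as the listing above; the names `DiceScore` and `FromConfidence` are hypothetical and do not come from the demo repo.

```csharp
using System;

public static class DiceScore
{
    // Maps a confidence value to a dice score from 1 to 6,
    // using the same thresholds as GetAssesPrediction.
    public static int FromConfidence(float confidence) => confidence switch
    {
        <= 0.50f => 1,
        <= 0.70f => 2,
        <= 0.80f => 3,
        <= 0.85f => 4,
        <= 0.90f => 5,
        <= 1.0f => 6,
        _ => 1 // values above 1.0 and NaN fall back to the lowest score
    };
}
```

With these thresholds, a confidence of 0.95 gives a six, while anything at or below 0.50 gives a one.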

As the code above shows, using the machine learning model is quite convenient. We just call the method Predict to get the label that the model decides is present in the loaded image. This is the image classification that the machine learning model found. Note that the method PredictAllLabels returns the confidence for each of the labels, which is what this demo displays.
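The generated classifier code is not shown in this article, so the following is only a sketch of the idea behind a per-label confidence list. It assumes the result is a set of label and score pairs sorted by descending score, which matches the IOrderedEnumerable<KeyValuePair<string, float>> type of the _predictedLabels field. The class `LabelRanking` and its method `Rank` are hypothetical names, and the sketch does not use ML.Net itself.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LabelRanking
{
    // Pairs each label with its score and sorts the pairs by descending confidence.
    public static IOrderedEnumerable<KeyValuePair<string, float>> Rank(
        IReadOnlyList<string> labels, IReadOnlyList<float> scores)
    {
        if (labels.Count != scores.Count)
        {
            throw new ArgumentException("labels and scores must have the same length");
        }

        return labels
            .Zip(scores, (label, score) => new KeyValuePair<string, float>(label, score))
            .OrderByDescending(pair => pair.Value);
    }
}
```

For example, ranking the labels "Horse" and "Moose" with the scores 0.08 and 0.92 puts "Moose" first, so the first entry corresponds to the predicted label and its value is the confidence shown to the user.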

There is no limit on the number of categories (labels) that one could train an image classification model to look for.

A benefit of ML.Net is the option to run it on on-premises servers and still get fairly good results from just a few sample images. But the more sample images you obtain for a label, the more precise the machine learning model will become. It is also possible to download a pre-trained model such as InceptionV3, which is compatible with the TensorFlow library used here and supports up to 1000 categories.

More information is available here from Microsoft about using a pre-trained model such as InceptionV3:

https://learn.microsoft.com/en-us/dotnet/machine-learning/tutorials/image-classification

Please note that the Free tier of Azure AI Search is rather slow and seems to allow queries only at a certain interval, but it suffices for trying the service out. For a real test, for example in an intranet scenario, the Standard tier of Azure AI Search is recommended, at about 250 USD per month as noted.
