https://github.com/toreaurstadboss/SemanticKernelPluginDemov4

The demo code is a Blazor Server app that demonstrates how to use Microsoft Semantic Kernel with plugins. I have chosen the Northwind database as the extra data the plugin uses. While debugging and inspecting the output, I can see that the plugin is successfully called and used. It is also easy to add plugins, which provide additional data to the AI model.

This makes plugins suitable for AI-powered solutions over private data that you want to make available to the AI model. For example, when using OpenAI GPT-4, a plugin makes it possible to control which data is retrieved and presented. It is a convenient way to provide a natural language interface for data reporting, such as listing results returned by the plugin. The plugin exposes kernel functions by decorating its methods with attributes. Let's first look at the Program.cs file, which wires up Semantic Kernel for the Blazor Server demo app.

Program.cs

```csharp
using Microsoft.EntityFrameworkCore;
using Microsoft.SemanticKernel;
using SemanticKernelPluginDemov4.Models;
using SemanticKernelPluginDemov4.Services;

namespace SemanticKernelPluginDemov4
{
    public class Program
    {
        public static void Main(string[] args)
        {
            var builder = WebApplication.CreateBuilder(args);

            // Add services to the container.
            builder.Services.AddRazorPages();
            builder.Services.AddServerSideBlazor();

            // Add DbContext
            builder.Services.AddDbContextFactory<NorthwindContext>(options =>
                options.UseSqlServer(builder.Configuration.GetConnectionString("DefaultConnection")));

            builder.Services.AddScoped<IOpenAIChatcompletionService, OpenAIChatcompletionService>();
            builder.Services.AddScoped<NorthwindSemanticKernelPlugin>();

            builder.Services.AddScoped(sp =>
            {
                var kernelBuilder = Kernel.CreateBuilder();
                kernelBuilder.AddOpenAIChatCompletion(
                    modelId: builder.Configuration.GetSection("OpenAI").GetValue<string>("ModelId")!,
                    apiKey: builder.Configuration.GetSection("OpenAI").GetValue<string>("ApiKey")!);
                var kernel = kernelBuilder.Build();

                var dbContextFactory = sp.GetRequiredService<IDbContextFactory<NorthwindContext>>();
                var northwindSemanticKernelPlugin = new NorthwindSemanticKernelPlugin(dbContextFactory);
                kernel.ImportPluginFromObject(northwindSemanticKernelPlugin);

                return kernel;
            });

            var app = builder.Build();

            // Configure the HTTP request pipeline.
            if (!app.Environment.IsDevelopment())
            {
                app.UseExceptionHandler("/Error");
                // The default HSTS value is 30 days. You may want to change this for production scenarios, see https://aka.ms/aspnetcore-hsts.
                app.UseHsts();
            }

            app.UseHttpsRedirection();
            app.UseStaticFiles();
            app.UseRouting();

            app.MapBlazorHub();
            app.MapFallbackToPage("/_Host");

            app.Run();
        }
    }
}
```

In the code above, note the following:

- IDbContextFactory is used to create DbContext instances, and the factory is injected into the plugin. In a Blazor Server app the client keeps a long-lived SignalR connection (a circuit) to the server, and scoped services live as long as that circuit. Injecting a factory lets the plugin create a short-lived DbContext for each operation instead of sharing one context for the whole session.

- The ImportPluginFromObject method imports the plugin into the kernel built here. Note that the kernel is registered as a scoped service. The plugin is also registered as a scoped service, although the kernel factory above creates its own plugin instance with new.
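The factory-per-operation pattern described above can be illustrated without EF Core. The sketch below is a minimal, hypothetical model: FakeContext, IContextFactory, FakeContextFactory and SupplierLookup are invented names standing in for NorthwindContext, IDbContextFactory<NorthwindContext> and the plugin. The plugin-like class holds only the long-lived factory and creates, then disposes, a new context on every call.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for NorthwindContext: a short-lived, disposable unit of work.
public sealed class FakeContext : IDisposable
{
    public IReadOnlyList<string> Suppliers { get; } = new[] { "Tokyo Traders", "Exotic Liquids" };

    public bool IsDisposed { get; private set; }

    public void Dispose() => IsDisposed = true;
}

// Hypothetical interface shaped like IDbContextFactory<TContext>.CreateDbContext().
public interface IContextFactory<TContext> where TContext : IDisposable
{
    TContext CreateContext();
}

// Records every context it hands out so that the lifetimes can be inspected.
public sealed class FakeContextFactory : IContextFactory<FakeContext>
{
    public List<FakeContext> Created { get; } = new();

    public FakeContext CreateContext()
    {
        var context = new FakeContext();
        Created.Add(context);
        return context;
    }
}

// Plugin-like class: it keeps the long-lived factory, never a context.
public sealed class SupplierLookup
{
    private readonly IContextFactory<FakeContext> _factory;

    public SupplierLookup(IContextFactory<FakeContext> factory) => _factory = factory;

    public List<string> GetSuppliers()
    {
        // A new context for this call only; it is disposed when the method returns.
        using var context = _factory.CreateContext();
        return context.Suppliers.OrderBy(s => s, StringComparer.Ordinal).ToList();
    }
}
```

Calling GetSuppliers twice yields two distinct contexts, both disposed afterwards, which is the same lifetime the `using (var dbContext = _northwindContext.CreateDbContext())` blocks give the real plugin.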

NorthwindSemanticKernelPlugin.cs

```csharp
using Microsoft.EntityFrameworkCore;
using Microsoft.SemanticKernel;
using SemanticKernelPluginDemov4.Models;
using System.ComponentModel;

namespace SemanticKernelPluginDemov4.Services
{
    public class NorthwindSemanticKernelPlugin
    {
        private readonly IDbContextFactory<NorthwindContext> _northwindContext;

        public NorthwindSemanticKernelPlugin(IDbContextFactory<NorthwindContext> northwindContext)
        {
            _northwindContext = northwindContext;
        }

        [KernelFunction]
        [Description("When asked about the suppliers of the Northwind database, use this method to get all the suppliers. Mention that the data comes from the Semantic Kernel plugin called: NorthwindSemanticKernelPlugin")]
        public async Task<List<string>> GetSuppliers()
        {
            // A short-lived DbContext for this call, created from the factory.
            using (var dbContext = _northwindContext.CreateDbContext())
            {
                return await dbContext.Suppliers
                    .OrderBy(s => s.CompanyName)
                    .Select(s => "Kernel method 'NorthwindSemanticKernelPlugin:GetSuppliers' gave this: " + s.CompanyName)
                    .ToListAsync();
            }
        }

        [KernelFunction]
        [Description("When asked about the total sales of a given month in a year, use this method. If asked about multiple months, call this method once per month, adjusting the month and year as provided. The month must be in the range 1-12 and the year in the range 1996-1998. If other values are provided, tell the user what the valid ranges are.")]
        public async Task<decimal> GetTotalSalesInMonthAndYear(int month, int year)
        {
            using (var dbContext = _northwindContext.CreateDbContext())
            {
                // Sum of order line totals: unit price * quantity, less the line discount.
                var sumOfOrders = await (from Order o in dbContext.Orders
                                         join OrderDetail od in dbContext.OrderDetails on o.OrderId equals od.OrderId
                                         where o.OrderDate.HasValue
                                               && o.OrderDate.Value.Month == month
                                               && o.OrderDate.Value.Year == year
                                         select (od.UnitPrice * od.Quantity) * (1 - (decimal)od.Discount)).SumAsync();

                return sumOfOrders;
            }
        }
    }
}
```

In the code above, note the attributes used. The KernelFunction attribute marks a method as a function that Semantic Kernel can expose to the model. The Description attribute tells the LLM when the method should be called and how to supply parameter values, if any.
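To make the role of the two attributes more concrete, here is a simplified, hypothetical sketch of how a framework can discover attribute-marked methods through reflection and read their DescriptionAttribute text. ExposedFunctionAttribute is an invented stand-in for [KernelFunction] so that the sketch runs without the Semantic Kernel package, and SamplePlugin and FunctionCatalog are likewise illustrative. This is not Semantic Kernel's actual implementation, which does considerably more, such as handling Task return types and building the parameter descriptions sent to the model. The sketch also puts [Description] on parameters, which Semantic Kernel reads as well, so the valid month and year ranges could live there instead of in the method-level text.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Linq;
using System.Reflection;

// Hypothetical marker standing in for Semantic Kernel's [KernelFunction].
[AttributeUsage(AttributeTargets.Method)]
public sealed class ExposedFunctionAttribute : Attribute { }

public sealed class SamplePlugin
{
    [ExposedFunction]
    [Description("Gets all suppliers.")]
    public List<string> GetSuppliers() => new();

    [ExposedFunction]
    [Description("Gets total sales for a month and year.")]
    public decimal GetTotalSales(
        [Description("Month, 1-12")] int month,
        [Description("Year, 1996-1998")] int year) => 0m;

    // Not marked, so it is not exposed.
    public void Helper() { }
}

public static class FunctionCatalog
{
    // Describes every marked public instance method: name, parameters and description.
    public static List<string> Describe(Type pluginType) =>
        pluginType
            .GetMethods(BindingFlags.Public | BindingFlags.Instance)
            .Where(m => m.GetCustomAttribute<ExposedFunctionAttribute>() is not null)
            .OrderBy(m => m.Name, StringComparer.Ordinal)
            .Select(m =>
            {
                string description = m.GetCustomAttribute<DescriptionAttribute>()?.Description ?? "";
                string parameters = string.Join(", ", m.GetParameters().Select(p =>
                    $"{p.Name}: {p.ParameterType.Name} ({p.GetCustomAttribute<DescriptionAttribute>()?.Description ?? "no description"})"));
                return $"{m.Name}({parameters}) - {description}";
            })
            .ToList();
}
```

For SamplePlugin this yields one line per marked method, for example `GetSuppliers() - Gets all suppliers.`, while Helper is skipped because it lacks the marker attribute.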

Let's look at the OpenAI service next.

OpenAIChatcompletionService.cs

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;

namespace SemanticKernelPluginDemov4.Services
{
    public class OpenAIChatcompletionService : IOpenAIChatcompletionService
    {
        private readonly Kernel _kernel;
        private readonly IChatCompletionService _chatCompletionService;

        public OpenAIChatcompletionService(Kernel kernel)
        {
            _kernel = kernel;
            _chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
        }

        public async IAsyncEnumerable<string?> RunQuery(string question)
        {
            var chatHistory = new ChatHistory();
            chatHistory.AddSystemMessage("You are a helpful assistant that answers only questions about the Northwind database. If you get other questions, explain that you can only answer questions about the Northwind database. It is important that only the Northwind database functions provided to the language model through the plugin are used when answering the questions. If no answer is available, say so.");
            chatHistory.AddUserMessage(question);

            // Stream the answer; passing the kernel lets the model call the plugin's kernel functions.
            await foreach (var chatUpdate in _chatCompletionService.GetStreamingChatMessageContentsAsync(
                chatHistory, CreateOpenAIExecutionSettings(), _kernel))
            {
                yield return chatUpdate.Content;
            }
        }

        private OpenAIPromptExecutionSettings? CreateOpenAIExecutionSettings()
        {
            return new OpenAIPromptExecutionSettings
            {
                // Let Semantic Kernel invoke kernel functions automatically when the model requests them.
                ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions
            };
        }
    }
}
```

In the code above, the kernel is injected into this service, which works because Program.cs registers the kernel as a scoped service. Kernel.GetRequiredService<T> resolves a service registered on the kernel itself, here the IChatCompletionService added by AddOpenAIChatCompletion; it is similar to the IServiceProvider method of the same name used in Program.cs.
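RunQuery returns the answer as an IAsyncEnumerable<string?>, one chunk per streamed update, and a chunk's content can be null. A caller, such as a Blazor component, typically appends each non-empty chunk as it arrives. The sketch below assumes a hypothetical FakeRunQuery that yields canned chunks in place of the real service; CollectAsync and its onUpdate callback are likewise illustrative names, not part of the demo.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

public static class StreamingAnswer
{
    // Hypothetical stand-in for RunQuery: yields canned chunks (question is ignored here).
    public static async IAsyncEnumerable<string?> FakeRunQuery(string question)
    {
        string?[] chunks = { "Suppliers: ", null, "Exotic Liquids, ", "Tokyo Traders" };
        foreach (var chunk in chunks)
        {
            await Task.Yield(); // simulate each update arriving asynchronously
            yield return chunk;
        }
    }

    // Appends every non-empty chunk and reports the text so far after each append.
    public static async Task<string> CollectAsync(IAsyncEnumerable<string?> stream, Action<string>? onUpdate = null)
    {
        var answer = new StringBuilder();
        await foreach (var chunk in stream)
        {
            if (string.IsNullOrEmpty(chunk)) continue;
            answer.Append(chunk);
            onUpdate?.Invoke(answer.ToString());
        }
        return answer.ToString();
    }
}
```

In a Blazor component, the onUpdate callback would be a natural place to update a bound field and call StateHasChanged() so that the answer renders progressively.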

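Note that ToolCallBehavior is set to AutoInvokeKernelFunctions. With this setting, the kernel functions are offered to the model as tools, and when the model requests a function call, Semantic Kernel invokes the function and sends the result back to the model automatically, so RunQuery needs no extra code to run the plugin.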
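Semantic Kernel runs the request, invoke, respond cycle internally when AutoInvokeKernelFunctions is set. The sketch below is a simplified, hypothetical model of that idea only: ModelReply, ToolCall, FinalAnswer and AutoInvokeLoop are invented types, the "model" is any function from chat history to a reply, and maxRoundTrips is this sketch's own safety bound. The real implementation works with JSON-encoded arguments and tool-call IDs rather than plain strings.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical reply types: the model either requests a function call or answers.
public abstract record ModelReply;
public sealed record ToolCall(string FunctionName, IReadOnlyDictionary<string, object> Arguments) : ModelReply;
public sealed record FinalAnswer(string Text) : ModelReply;

public static class AutoInvokeLoop
{
    public static string Run(
        List<string> history,
        Func<IReadOnlyList<string>, ModelReply> model,
        IReadOnlyDictionary<string, Func<IReadOnlyDictionary<string, object>, object>> functions,
        int maxRoundTrips = 5)
    {
        for (int i = 0; i < maxRoundTrips; i++)
        {
            switch (model(history))
            {
                case FinalAnswer answer:
                    history.Add($"assistant: {answer.Text}");
                    return answer.Text;

                case ToolCall call:
                    // The "auto invoke" step: run the requested function and feed its result back.
                    object result = functions[call.FunctionName](call.Arguments);
                    history.Add($"tool {call.FunctionName}: {result}");
                    break;
            }
        }
        throw new InvalidOperationException("Too many function-call round trips.");
    }
}
```

With a fake model that first requests a hypothetical GetTotalSales function and then answers from the tool result, the history ends up with three entries: the user question, the tool result and the final assistant answer. This mirrors why the demo's plugin methods run without any explicit call in RunQuery.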

Extending AI-powered functionality with plugins requires little extra code with Microsoft Semantic Kernel.

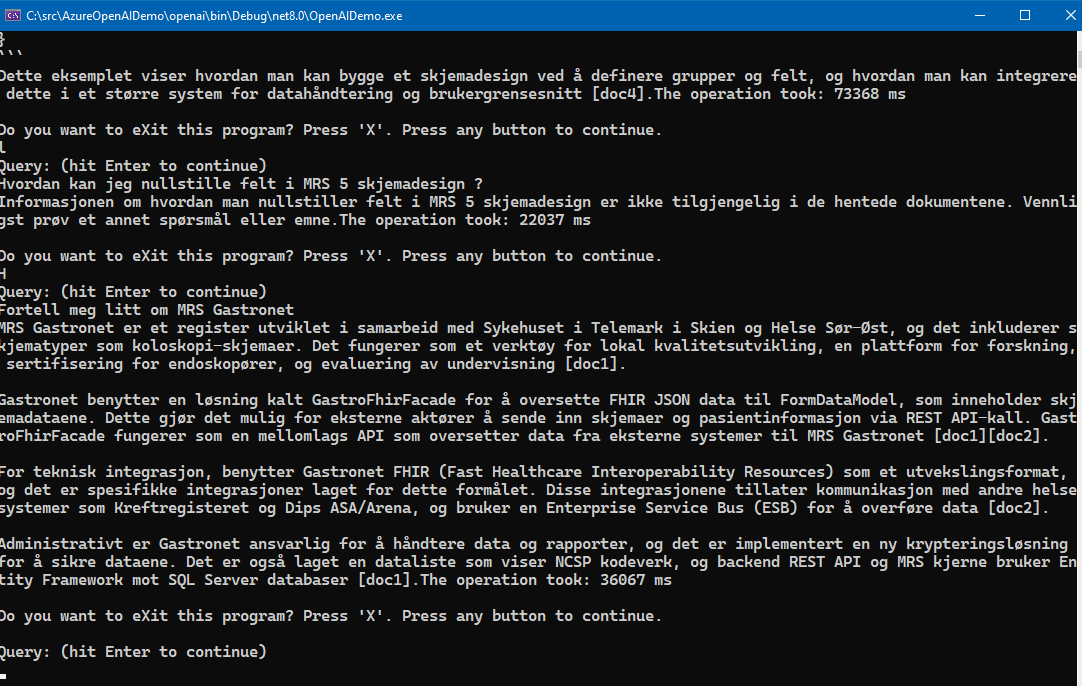

A screenshot of the demo is shown below.

Please note that the Free tier of Azure AI Search is rather slow and seems to allow queries only at certain intervals, but it is sufficient for trying things out. To test it properly, for example in an intranet scenario, the Standard tier of Azure AI Search is recommended, at about 250 USD per month as noted.
